16 April 2024

Peter Czanik

When it comes to sudo logging, pretty is not always better

16 April 2024

Peter Czanik

Working with sudo’s json_compact logs in syslog-ng

16 April 2024

Marcin Juszkiewicz

ConfigurationManager in EDK2: just say no

During my work on the SBSA Reference Platform I have spent a lot of time in firmware code, which mostly meant Tianocore EDK2, as Trusted Firmware is quite small.

Writing all those ACPI tables by hand takes time, so I checked out the ConfigurationManager component, which can generate them for me.

Introduction

In 2018 Sami Mujawar from Arm contributed the Dynamic Tables Framework to the Tianocore EDK2 project. The goal was to generate all ACPI tables from the data structures describing hardware that EDK2 already has.

In 2023 I was writing C code to generate the IORT and GTDT tables, and started wondering about using ConfigurationManager.

I mailed the edk2-devel mailing list asking for pointers, documentation and hints. I got nothing in return, so the idea went on the shelf.

SBSA-Ref and multiple PCI Express buses

Last week I got the SBSA-Ref system booting in a NUMA configuration with three separate PCI Express buses, and started working on getting EDK2 firmware to recognize them as such.

It took me a day, and then the pci command listed the cards properly:

Shell> pci
   Seg  Bus  Dev  Func
   ---  ---  ---  ----
    00   00   00    00 ==> Bridge Device - Host/PCI bridge
             Vendor 1B36 Device 0008 Prog Interface 0
    00   00   01    00 ==> Network Controller - Ethernet controller
             Vendor 8086 Device 10D3 Prog Interface 0
    00   00   02    00 ==> Bridge Device - PCI/PCI bridge
             Vendor 1B36 Device 000C Prog Interface 0
    00   00   03    00 ==> Bridge Device - Host/PCI bridge
             Vendor 1B36 Device 000B Prog Interface 0
    00   00   04    00 ==> Bridge Device - Host/PCI bridge
             Vendor 1B36 Device 000B Prog Interface 0
    00   01   00    00 ==> Mass Storage Controller - Non-volatile memory subsystem
             Vendor 1B36 Device 0010 Prog Interface 2
    00   40   00    00 ==> Bridge Device - PCI/PCI bridge
             Vendor 1B36 Device 000C Prog Interface 0
    00   40   01    00 ==> Bridge Device - PCI/PCI bridge
             Vendor 1B36 Device 000C Prog Interface 0
    00   41   00    00 ==> Base System Peripherals - SD Host controller
             Vendor 1B36 Device 0007 Prog Interface 1
    00   42   00    00 ==> Display Controller - Other display controller
             Vendor 1234 Device 1111 Prog Interface 0
    00   80   00    00 ==> Bridge Device - PCI/PCI bridge
             Vendor 1B36 Device 000E Prog Interface 0
    00   80   01    00 ==> Bridge Device - PCI/PCI bridge
             Vendor 1B36 Device 000C Prog Interface 0
    00   81   09    00 ==> Multimedia Device - Audio device
             Vendor 1274 Device 5000 Prog Interface 0
    00   81   10    00 ==> Network Controller - Ethernet controller
             Vendor 8086 Device 100E Prog Interface 0
    00   82   00    00 ==> Mass Storage Controller - Serial ATA controller
             Vendor 8086 Device 2922 Prog Interface 1

The three buses are 0x00, 0x40 and 0x80. But then I had to tell the operating system about them, which meant playing with the ACPI table code in C.
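To make the grouping concrete, here is a small C sketch (not EDK2 code) that maps each bus number from the listing to the root bus it sits under. It assumes that each root bus owns every bus number from its own up to the one before the next root bus; the actual bus ranges the firmware uses are not given in this post, and the helper name `root_bus_for` is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Root bus numbers seen in the EFI shell listing (segment 0). */
static const uint8_t root_buses[] = { 0x00, 0x40, 0x80 };

/*
 * Hypothetical helper: return the root bus that owns `bus`, assuming each
 * root bus owns every bus number from its own up to the next root bus
 * (the last one owns everything up to 0xFF). root_buses must be sorted.
 */
static uint8_t root_bus_for(uint8_t bus)
{
    uint8_t owner = root_buses[0];

    for (size_t i = 0; i < sizeof root_buses / sizeof root_buses[0]; i++) {
        if (bus >= root_buses[i])
            owner = root_buses[i];
    }
    return owner;
}
```

Under that assumption, the SD host controller on bus 0x41 and the display controller on bus 0x42 belong to root bus 0x40, while the SATA controller on bus 0x82 belongs to root bus 0x80.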

So the idea came: “what about trying ConfigurationManager?”

Another try

I mailed the edk2-devel mailing list again asking for pointers and documentation hints. Then I looked at the code written for N1SDP and started playing with ConfigurationManager…

ConfigurationManager.c has an EDKII_PLATFORM_REPOSITORY_INFO struct with hundreds of lines of data (as nested structs): from the list of ACPI tables I want to have (FADT, GTDT, APIC, SPCR, DBG2, IORT, MCFG, SRAT, DSDT, PPTT etc.) to all the hardware details, like GIC, PCIe, timer, CPU and memory information.

Then there is the code for querying this struct. I thought the CM/DT (ConfigurationManager/DynamicTables) framework would already provide it in EDK2, but no: each platform has its own set of functions. Another few hundred lines to maintain.

It took some time to get it building; then I started filling in proper data and comparing the result with the ACPI tables I had before. There were differences to sort out. But digging further into the code, I saw that I was going deeper and deeper into a rabbit hole…

Dynamic systems do not fit CM?

For platforms with dynamic hardware configuration (like SBSA-Ref) I needed to write code that would populate that struct with data at runtime: check the number of CPU cores and write CPU information (with topology, caches etc.), create all the GIC structures and mappings, then the same for PCIe buses, and so on…

STATIC
EFI_STATUS
EFIAPI
InitializePlatformRepository (
  IN EDKII_PLATFORM_REPOSITORY_INFO * CONST PlatRepoInfo
  )
{
  GicInfo                  GicInfo;
  CM_ARM_GIC_REDIST_INFO   *GicRedistInfo;
  CM_ARM_GIC_ITS_INFO      *GicItsInfo;
  CM_ARM_SMMUV3_NODE       *SmmuV3Info;

  // Fetch the GIC base addresses known at runtime.
  GetGicDetails(&GicInfo);

  // GIC distributor base address.
  PlatRepoInfo->GicDInfo.PhysicalBaseAddress = GicInfo.DistributorBase;

  // Base of the GIC redistributor discovery range.
  GicRedistInfo = &PlatRepoInfo->GicRedistInfo[0];
  GicRedistInfo->DiscoveryRangeBaseAddress = GicInfo.RedistributorsBase;

  // GIC ITS base address.
  GicItsInfo = &PlatRepoInfo->GicItsInfo[0];
  GicItsInfo->PhysicalBaseAddress = GicInfo.ItsBase;

  // SMMUv3 base address comes from a PCD.
  SmmuV3Info = &PlatRepoInfo->SmmuV3Info[0];
  SmmuV3Info->BaseAddress = PcdGet64 (PcdSmmuBase);

  return EFI_SUCCESS;
}

In my case, that can mean writing even more code to populate the CM struct of structs than it would take to generate the ACPI tables by hand.

Summary

The ConfigurationManager and DynamicTables frameworks look tempting, and there may be systems where they can be used successfully. But I know that I do not want to touch them again. All those structs of structs may look good to someone familiar with LISP or JSON, but not to me.

08 April 2024

Peter Czanik

Centralized system and LSF logging on a Turing Pi system

04 April 2024

Marcin Juszkiewicz

DT-free EDK2 on SBSA Reference Platform

Over the last few weeks we worked on getting rid of DeviceTree in EDK2 on the SBSA Reference Platform. And finally we managed it!

All the code is merged into the upstream EDK2 repository.

What?

Someone may wonder where DeviceTree was in the SBSA Reference Platform. Wasn’t it a UEFI and ACPI platform?

Yes, from the operating system's point of view it is UEFI and ACPI. But if you look deeper, you will see a DeviceTree hidden inside our chain of software components:

/dts-v1/;

/ {
	machine-version-minor = <0x03>;
	machine-version-major = <0x00>;
	#size-cells = <0x02>;
	#address-cells = <0x02>;
	compatible = "linux,sbsa-ref";

	chosen {
	};

	memory@10000000000 {
		reg = <0x100 0x00 0x00 0x40000000>;
		device_type = "memory";
	};

	intc {
		reg = <0x00 0x40060000 0x00 0x10000
		       0x00 0x40080000 0x00 0x4000000>;

		its {
			reg = <0x00 0x44081000 0x00 0x20000>;
		};
	};

	cpus {
		#size-cells = <0x00>;
		#address-cells = <0x02>;

		cpu@0 {
			reg = <0x00 0x00>;
		};

		cpu@1 {
			reg = <0x00 0x01>;
		};

		cpu@2 {
			reg = <0x00 0x02>;
		};

		cpu@3 {
			reg = <0x00 0x03>;
		};
	};
};

It is a very minimal one, providing only the information we need. It does not even pass any compliance checks. For example, for Linux the GIC node (the /intc one) should have a gazillion fields, but we only need addresses.
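Reading those `reg` values takes a bit of arithmetic: the root node sets `#address-cells` and `#size-cells` to 2, so each address and each size is split into two 32-bit cells, most significant cell first. Below is a minimal C sketch of that decoding. It assumes the cells have already been converted from the blob's big-endian byte order to host order (a real parser would typically rely on a library such as libfdt for that), and the helper names are hypothetical.

```c
#include <stdint.h>

/* Combine two 32-bit cells (most significant first) into one 64-bit value. */
static uint64_t dt_cells_to_u64(uint32_t hi, uint32_t lo)
{
    return ((uint64_t)hi << 32) | lo;
}

/* One (base, size) pair from a reg property. */
struct dt_reg {
    uint64_t base;
    uint64_t size;
};

/*
 * Decode the first (base, size) pair of a reg property whose parent uses
 * #address-cells = 2 and #size-cells = 2. `cells` must already be in host
 * byte order (the blob itself stores cells big-endian).
 */
static struct dt_reg dt_decode_reg(const uint32_t cells[4])
{
    struct dt_reg r = {
        .base = dt_cells_to_u64(cells[0], cells[1]),
        .size = dt_cells_to_u64(cells[2], cells[3]),
    };
    return r;
}
```

For the memory node, `<0x100 0x00 0x00 0x40000000>` decodes to base 0x10000000000 (hence the node name `memory@10000000000`) and size 0x40000000, which is 1 GiB. The `cpu@N` nodes use two address cells and no size cells, so their `reg` is a single 64-bit value, built the same way as `dt_cells_to_u64(0x00, N)`.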

Trusted Firmware reads and parses this DeviceTree, then provides the information to the upper firmware level (EDK2 in our case) via Secure Monitor Calls (SMC). The DeviceTree is still passed along too, but we no longer read it.

Why?

Our goal is to treat the software components a bit differently than people may expect. QEMU is the “virtual hardware” layer, TF-A provides an interface to the “embedded controller” (EC) layer, and EDK2 is the firmware layer on top.

On physical hardware, firmware assumes some parts and asks the EC for the rest of the system information. QEMU does not give us that, but it lets us alter the system configuration with a bunch of command-line arguments, far more than would be possible on most hardware platforms.

EDK2 asks for CPU, GIC and memory information. When there is no information about processors or memory, it informs the user and shuts the system down (such a situation has no chance of happening, but it works as an example).
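Those questions travel as Secure Monitor Calls. Under the Arm SMC Calling Convention, the 32-bit function identifier passed in register x0 encodes the call type: bit 31 marks a fast call, bit 30 selects the SMC64 convention, bits 29:24 name the owning entity (2 is the Silicon Provider, or SiP, range) and bits 15:0 hold the function number. The C sketch below only builds and inspects such identifiers; the function number in the example is arbitrary, as the actual numbers TF-A assigns for sbsa-ref are not listed here.

```c
#include <stdint.h>

/* SMC Calling Convention function ID layout (Arm DEN0028). */
#define SMCCC_FAST_CALL  (1u << 31)  /* bit 31: fast call                */
#define SMCCC_SMC64      (1u << 30)  /* bit 30: SMC64 calling convention  */
#define SMCCC_OEN_SHIFT  24          /* bits 29:24: owning entity number  */
#define SMCCC_OEN_MASK   0x3Fu
#define SMCCC_OEN_SIP    2u          /* Silicon Provider (SiP) service    */
#define SMCCC_FUNC_MASK  0xFFFFu     /* bits 15:0: function number        */

/* Build the ID of a fast SMC64 call into the SiP range. */
static uint32_t smccc_sip64_fast_id(uint16_t func)
{
    return SMCCC_FAST_CALL | SMCCC_SMC64 |
           (SMCCC_OEN_SIP << SMCCC_OEN_SHIFT) | func;
}

/* Extract the owning entity number from a function ID. */
static uint32_t smccc_oen(uint32_t id)
{
    return (id >> SMCCC_OEN_SHIFT) & SMCCC_OEN_MASK;
}

/* Extract the function number from a function ID. */
static uint32_t smccc_func(uint32_t id)
{
    return id & SMCCC_FUNC_MASK;
}
```

For example, `smccc_sip64_fast_id(0x10)` yields 0xC2000010. Issuing the `smc` instruction with such a value in x0 hands control to the secure monitor, and the results come back in general-purpose registers starting at x0.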

Bonus stuff: NUMA

A bonus part of this work was adding firmware support for NUMA configurations. When QEMU is run with NUMA arguments, the operating system gets all of the memory and proper configuration information.

QEMU arguments used:

-smp 4,sockets=4,maxcpus=4
-m 4G,slots=2,maxmem=5G
-object memory-backend-ram,size=1G,id=m0
-object memory-backend-ram,size=3G,id=m1
-numa node,nodeid=0,cpus=0-1,memdev=m0
-numa node,nodeid=1,cpus=2,memdev=m1
-numa node,nodeid=2,cpus=3

How the operating system sees the NUMA information:

root@sbsa-ref:~# numactl --hardware
available: 3 nodes (0-2)
node 0 cpus: 0 1
node 0 size: 975 MB
node 0 free: 840 MB
node 1 cpus: 2
node 1 size: 2950 MB
node 1 free: 2909 MB
node 2 cpus: 3
node 2 size: 0 MB
node 2 free: 0 MB
node distances:
node 0 1 2
0: 10 20 20
1: 20 10 20
2: 20 20 10
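The distance table at the end is the simplest possible one: 10 for a node's distance to itself and 20 to every other node (the same values Linux defines as LOCAL_DISTANCE and REMOTE_DISTANCE). A short C sketch that builds such a matrix for any node count and prints a row in the compact form used above; the function names are hypothetical, and this is not how the firmware or the kernel actually produce the table.

```c
#include <stddef.h>
#include <stdio.h>

#define LOCAL_DISTANCE  10  /* distance from a node to itself */
#define REMOTE_DISTANCE 20  /* distance to every other node   */

/* Fill an n x n row-major matrix: 10 on the diagonal, 20 elsewhere. */
static void fill_default_distances(size_t n, unsigned char *dist)
{
    for (size_t i = 0; i < n; i++)
        for (size_t j = 0; j < n; j++)
            dist[i * n + j] = (i == j) ? LOCAL_DISTANCE : REMOTE_DISTANCE;
}

/*
 * Format one row as "<node>: d0 d1 ...", e.g. "1: 20 10 20".
 * Returns the length written, or -1 if the buffer is too small.
 */
static int format_distance_row(size_t n, const unsigned char *dist,
                               size_t row, char *buf, size_t len)
{
    int used = snprintf(buf, len, "%zu:", row);

    for (size_t j = 0; j < n && used >= 0 && (size_t)used < len; j++) {
        int w = snprintf(buf + used, len - (size_t)used, " %u",
                         (unsigned)dist[row * n + j]);
        used = (w < 0) ? -1 : used + w;
    }
    return (used < 0 || (size_t)used >= len) ? -1 : used;
}
```

With `n = 3`, formatting row 1 gives `1: 20 10 20`, matching the middle row of the numactl output.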

What next?

CPU topology information is in the review queue: all those sockets, clusters, cores and threads. QEMU will pass it in the DeviceTree, TF-A will provide it via SMC, and then EDK2 will put it into one of the ACPI tables (PPTT, the Processor Properties Topology Table).
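Describing that hierarchy means knowing, for every CPU, which socket, cluster, core and thread it is. A hedged C sketch of that bookkeeping: it splits a linear CPU index into the four levels, assuming threads vary fastest, then cores, then clusters, then sockets. That ordering and the struct and function names are assumptions for illustration, not taken from QEMU, TF-A or EDK2.

```c
/* Counts per level, as on a -smp style command line (hypothetical struct). */
struct smp_topology {
    unsigned sockets, clusters, cores, threads;
};

/* Where one CPU sits in the hierarchy a PPTT describes. */
struct cpu_location {
    unsigned socket, cluster, core, thread;
};

/*
 * Split a linear CPU index into socket/cluster/core/thread, assuming
 * threads vary fastest, then cores, then clusters, then sockets.
 * `cpu` is expected to be below sockets * clusters * cores * threads.
 */
static struct cpu_location cpu_locate(const struct smp_topology *t,
                                      unsigned cpu)
{
    struct cpu_location loc;

    loc.thread = cpu % t->threads;
    cpu /= t->threads;
    loc.core = cpu % t->cores;
    cpu /= t->cores;
    loc.cluster = cpu % t->clusters;
    loc.socket = cpu / t->clusters;
    return loc;
}
```

With the NUMA example above (`-smp 4,sockets=4`, so one core and one thread per socket), CPU 3 lands in socket 3; with two of everything, CPU 13 would be socket 1, cluster 1, core 0, thread 1.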

If someone decides to write their own firmware for the SBSA Reference Platform (like a port of U-Boot), both the DeviceTree and the set of SMC calls will be waiting for them, ready to be used to gather hardware information.

02 April 2024

Peter Czanik

The syslog-ng health check

01 April 2024

Marcin Juszkiewicz

Remote BorgBackup machine

Over three years ago I moved to BorgBackup to keep my data safe on other machines. Due to recent datacenter changes I needed to create a new remote space for my data.

Idea

The idea was simple: take a small computer, put a disk inside, install Debian and boot it. Then wait for incoming connections and store data for the future.

Hardware selection

But what kind of hardware to use? Many people would expect me to use something Arm-based. Maybe an Espressobin or a RockPro64?

I have to admit: I thought about them for a moment, and rejected both. The first one stopped booting some time ago (no idea why); the second would require building a case, checking whether it boots etc.

Instead I used a system that had just been freed from its duties: a Fujitsu S920 thin client. I paid about 30-40€ for it a few months ago.

I took a 1TB hard drive (with just 100 days of use) from a drawer for data, installed Debian stable on the internal 120GB mSATA drive and started the setup.

Ansible

Some time ago I reviewed a book for my friends: The Linux DevOps Handbook. So this time I decided to use Ansible instead of doing things by hand. This way I have playbooks to prepare another such system if needed.

A few vault-related issues later, I had the system set up the way I wanted, with the required packages installed, and could start preparing it to be a BorgBackup remote machine.

There are some example roles for it in the BorgBackup documentation. I took those, adapted them to my needs, and then the first backups were done.

Future steps

I still have some work to finish. For example, I want the system to send me an email each time a backup is done, with information about which system sent the data, how much time and space it took, and how much space is left on the internal drive.

And I have to move the machine to some remote location, because right now it sits on a shelf at my home ;D
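The “how much space is left” part of that planned report is easy to get programmatically. As an illustration only (the post does not say how the report will be built), here is a C sketch using the POSIX `statvfs()` call; which path to check, for example the mount point holding the Borg repositories, is left to the caller and is an assumption.

```c
#include <stdint.h>
#include <sys/statvfs.h>

/*
 * Return the space (in bytes) available to unprivileged users on the
 * filesystem containing `path`, or -1 if statvfs() fails.
 */
static int64_t free_bytes(const char *path)
{
    struct statvfs st;

    if (statvfs(path, &st) != 0)
        return -1;
    /* f_bavail is counted in units of f_frsize (the fragment size). */
    return (int64_t)st.f_bavail * (int64_t)st.f_frsize;
}
```

`f_bavail` counts only blocks available to non-root users, which is usually the figure that matters when backups are written by a dedicated, unprivileged user.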

27 March 2024

Peter Czanik

Alerting on One Identity Cloud PAM Essentials logs using syslog-ng

25 March 2024

Marcin Juszkiewicz

Running SBSA Reference Platform

Recently people asked me how to run the SBSA Reference Platform for their own testing and development, which shows that I should write some documentation.

But first let me blog about it…

Requirements

To run the SBSA Reference Platform emulation you need:

- QEMU (8.2+ recommended)

- EDK2 firmware files

That’s all. Sure, some hardware resources would be handy, but everyone has some kind of computer available, right?

QEMU

Nothing special is required as long as you have the qemu-system-aarch64 binary available.

EDK2

We provide EDK2 binaries on the CodeLinaro server. Go to the “latest/edk2” directory, fetch both “SBSA_FLASH*” files, unpack them and you are ready to go. You may compare checksums (before unpacking) with the values in the “latest/README.txt” file.

These binaries are built from release versions of Trusted Firmware (TF-A) and Tianocore EDK2, plus the latest “edk2-platforms” code (as that repository does not use tags).

Building EDK2

If you decide to build EDK2 on your own, we provide TF-A binaries in the “edk2-non-osi” repository. I update them when needed.

Instructions for building EDK2 are in the Qemu/SbsaQemu directory of the “edk2-platforms” repository.

Running SBSA Reference Platform emulation

Note that this machine is fully emulated, even on AArch64 systems where virtualization is available.

Let's go through an example QEMU command line:

qemu-system-aarch64 \
    -machine sbsa-ref \
    -drive file=firmware/SBSA_FLASH0.fd,format=raw,if=pflash \
    -drive file=firmware/SBSA_FLASH1.fd,format=raw,if=pflash \
    -serial stdio \
    -device usb-kbd \
    -device usb-tablet \
    -cdrom disks/alpine-standard-3.19.1-aarch64.iso

First we select the “sbsa-ref” machine (it defaults to four Neoverse-N1 CPU cores and 1GB of RAM). Then we point to the firmware files (their order is important).

The serial console is useful for diagnostic output; just remember not to press Ctrl-C there unless you want to take the whole emulation down.

The USB devices give us a working keyboard and pointing device. A USB tablet is more useful than a USB mouse (-device usb-mouse adds one). If you want to run *BSD operating systems, I recommend adding a USB mouse.

And the last entry adds the Alpine 3.19.1 ISO image.

The system boots to a text console on the graphical output. For some reason, the boot console is on the serial port.

Adding hard disk

If you want to add a hard disk, adding “-hdb disk.img” is enough (“hdb” because the cdrom took the first slot on the AHCI controller).

A handy thing is the “virtual FAT drive”, which lets you create a guest drive from a directory on the host:

-drive if=ide,file=fat:ro:DIRECTORY_ON_HOST,format=raw

This is useful for running EFI binaries, as the drive is visible in the UEFI environment. It is not present when the operating system is booted.

Adding NVMe drive

An NVMe drive is composed of two things:

- PCIe device

- storage drive

So let's add it in a nice way, using a PCIe root port:

-device pcie-root-port,id=root_port_for_nvme1,chassis=2,slot=0
-device nvme,serial=deadbeef,bus=root_port_for_nvme1,drive=nvme
-drive file=disks/nvme.img,format=raw,id=nvme,if=none

Using NUMA configuration

QEMU can emulate a Non-Uniform Memory Access (NUMA) setup. This usually means multi-socket systems with memory attached to each CPU socket.

Example config:

-smp 4,sockets=4,maxcpus=4
-m 4G,slots=2,maxmem=5G
-object memory-backend-ram,size=1G,id=m0
-object memory-backend-ram,size=3G,id=m1
-numa node,nodeid=0,cpus=0-1,memdev=m0
-numa node,nodeid=1,cpus=2,memdev=m1
-numa node,nodeid=2,cpus=3

This adds four CPU sockets and 4GB of memory. The first node has 2 CPU cores and 1GB of RAM, the second node has 1 CPU and 3GB of RAM, and the last node has 1 CPU and no local memory.
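The arithmetic behind that example is worth spelling out: 1GB + 3GB + nothing adds up to the `-m 4G` total, and CPUs 0-1, 2 and 3 together cover all four CPUs, each exactly once. QEMU performs its own validation when it parses these options; the C sketch below only illustrates those two properties, and its struct and function names are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define GiB (1024ull * 1024 * 1024)

/* One "-numa node" entry: its CPU range and the size of its memdev. */
struct numa_node {
    unsigned first_cpu, last_cpu;  /* cpus=first-last                  */
    uint64_t mem_bytes;            /* 0 when the node has no memdev    */
};

/*
 * True if node memory adds up to ram_bytes and every CPU in 0..ncpus-1
 * belongs to exactly one node. This sketch handles at most 64 CPUs.
 */
static bool numa_config_consistent(const struct numa_node *nodes, size_t n,
                                   uint64_t ram_bytes, unsigned ncpus)
{
    unsigned owners[64] = { 0 };   /* how many nodes claim each CPU */
    uint64_t total = 0;

    if (ncpus > 64)
        return false;
    for (size_t i = 0; i < n; i++) {
        total += nodes[i].mem_bytes;
        for (unsigned c = nodes[i].first_cpu; c <= nodes[i].last_cpu; c++) {
            if (c >= ncpus)
                return false;      /* node names a CPU that does not exist */
            owners[c]++;
        }
    }
    for (unsigned c = 0; c < ncpus; c++)
        if (owners[c] != 1)
            return false;          /* CPU unassigned or assigned twice */
    return total == ram_bytes;
}
```

The example configuration corresponds to `{ {0, 1, 1 * GiB}, {2, 2, 3 * GiB}, {3, 3, 0} }` with 4 GiB of RAM and four CPUs, which passes both checks.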

Note that support for such a setup is a work in progress now (March 2024). We merged the required code into TF-A and have a set of patches for EDK2 in review. Without them you will see only the resources of the first NUMA node.

Complex PCI Express setup

Our platform has GIC ITS support, so we can try some complex PCI Express structures.

This example uses a PCIe switch to add more PCIe slots and then (to complicate things) puts a PCIe-to-PCI bridge into one of them to make use of an old Intel e1000 network card:

-device pcie-root-port,id=root_port_for_switch1,chassis=0,slot=0
-device x3130-upstream,id=up_port1,bus=root_port_for_switch1
-device xio3130-downstream,id=down_port1,bus=up_port1,chassis=1,slot=0
-device ac97,bus=down_port1
-device xio3130-downstream,id=down_port2,bus=up_port1,chassis=1,slot=1
-device pcie-pci-bridge,id=pci1,bus=down_port2
-device e1000,bus=pci1,addr=2
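Each `bus=` value refers to the `id` of the device providing that bus, so these options form a tree. A small C sketch that models just the command line above (it uses no QEMU API) and counts how many bridges and ports sit between a device and the root bus; the struct and function names are hypothetical.

```c
#include <stddef.h>
#include <string.h>

/* One -device option: driver, its id= (or NULL) and its bus= (or NULL). */
struct qdev {
    const char *driver;
    const char *id;
    const char *bus;   /* NULL: plugged into the machine's root bus */
};

/* The PCIe switch example, one entry per -device line. */
static const struct qdev devices[] = {
    { "pcie-root-port",     "root_port_for_switch1", NULL },
    { "x3130-upstream",     "up_port1",   "root_port_for_switch1" },
    { "xio3130-downstream", "down_port1", "up_port1" },
    { "ac97",               NULL,         "down_port1" },
    { "xio3130-downstream", "down_port2", "up_port1" },
    { "pcie-pci-bridge",    "pci1",       "down_port2" },
    { "e1000",              NULL,         "pci1" },
};

#define NDEVICES (sizeof devices / sizeof devices[0])

/* Find the device whose id= matches `id`; NULL if there is none. */
static const struct qdev *find_by_id(const char *id)
{
    for (size_t i = 0; i < NDEVICES; i++)
        if (devices[i].id != NULL && strcmp(devices[i].id, id) == 0)
            return &devices[i];
    return NULL;
}

/* Count the devices between `dev` and the root bus by following bus= links. */
static int hops_to_root(const struct qdev *dev)
{
    int hops = 0;

    while (dev != NULL && dev->bus != NULL) {
        dev = find_by_id(dev->bus);
        hops++;
    }
    return hops;
}
```

The e1000 card ends up four hops from the root (PCIe-to-PCI bridge, downstream port, upstream port, root port), while the AC97 card is three hops away.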

Some helper scripts

During the last year I wrote some helper scripts for SBSA Reference Platform testing. They are stored in the sbsa-ref-status repository on GitHub.

They may lack up-to-date documentation, but they show how I use the platform.

Summary

The SBSA Reference Platform can be used for testing several things: from operating systems to the (S)BSA compliance of the platform, checking how some things are emulated in QEMU, or playing with PCIe setups (NUMA systems can have separate PCI Express buses, but we do not handle that in firmware yet).

Have fun!

19 March 2024

Peter Czanik

Collecting One Identity Cloud PAM Essentials logs using syslog-ng

14 March 2024

Peter Czanik

The syslog-ng Insider 2024-03: MacOS; OpenTelemetry;

05 March 2024

Peter Czanik

Dedicated Windows XML eventlog parser in syslog-ng

25 February 2024

Marcin Juszkiewicz

Good keyboard is a must

I have been using a Microsoft Ergonomic Desktop 4000 keyboard for over 10 years now, and a Microsoft Natural Keyboard before that.

All because of wrists and RSI…

RSI started

In 2005 I started having RSI problems. At one point I was wearing two wrist braces, which made my life really difficult.

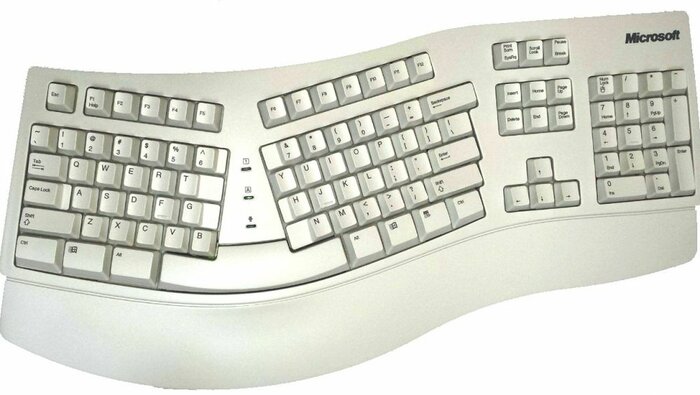

The solution was quite simple: buying an ergonomic keyboard, which at that time meant the Microsoft Natural Keyboard:

Soon I had two of them, one at home and one at work, and the problem was gone.

Keyboard upgrade

Time passed and one of the keyboards started to malfunction. I looked at the available options and bought this one (the photo shows its current state):

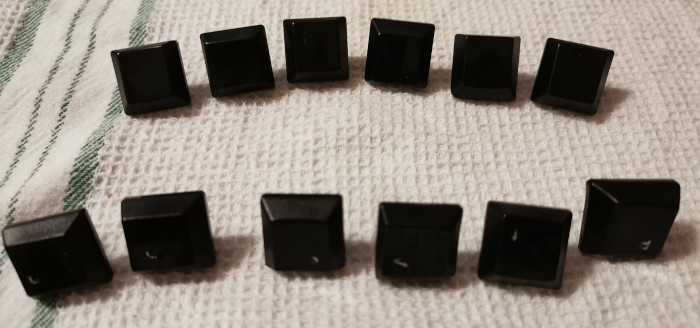

I used it for years, and it showed more and more. The keycaps started to shine and the wrist pads started to disintegrate. Some keys lost their markings completely:

Yet it still worked, and that was fine, as I do not look at the keys when I type.

Repairs

One day I found a broken one on an auction site. It looked much nicer than mine, so I bought it. It took me some time to disassemble both (lots of screws).

After 2-3 hours of work my keyboard was clean. I managed to replace most of the keys (some had to stay, as the layout was a bit different) and got wrist pads in excellent condition.

A week later, while visiting a local DIY store, I found another broken one. Someone had put it into the e-waste bin. It was in excellent condition and even had the same layout as the one I was using, so I took it home as another source of spare parts.

Going mechanical?

In 2023 I started thinking about moving to a mechanical keyboard. The problem was “which one”, as there was not a big choice of options.

The Alice/Arise layout got quite popular in mechanical keyboard communities. But those keyboards are flat, while the MS 4000 is curved (the middle of the keyboard is higher than the sides).

Periboard 535

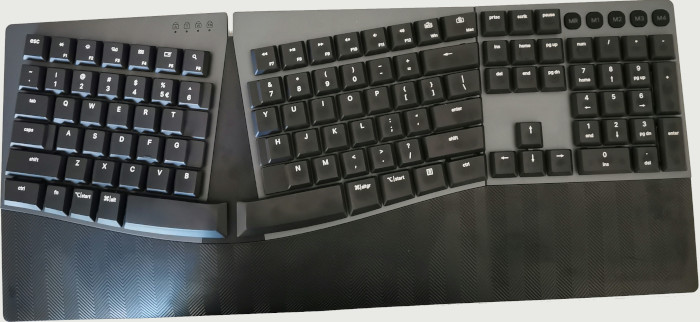

But there was one keyboard that was getting popular: the Periboard 535:

It looked like something between the MS 4000 and Arise. Some of my friends already used it and recommended it to me.

So I bought it on Amazon DE. The keyboard arrived, and I unpacked and connected it. One hour of use later I packed it back up and took a break from the computer for most of the day.

My left hand was in pain. The hard plastic wrist pads were a disaster: too short for my hands, so they pressed against the bottom edge of my palm. A complete disaster.

I sent it back and got my money back.

Let's do something DIY?

Sure, the Periboard was painful, but at the same time it was nice to type on. So I decided to take a look at my collection of MS 4000 keyboards.

At that time I had one working one and three dead ones (matrix foil, electronics or other reasons). I decided to sacrifice one and try to replace its keys with some Cherry MX clones.

The goal is to do it the cheap way. I will use Redragon switches and no-name keycaps, both bought on Aliexpress for less than 50€ in total.

You may track my progress on Mastodon under the #ms4kmech tag.

Current collection

In the meantime I got two more working MS 4000 keyboards from friends. So the current state looks like this:

On the left are the dead keyboards, on the right the working ones. Bottom left is the one I use for experiments with mechanical switches. Bottom right is the one I use daily.

I have at least three different layouts (the one without keys was one of the EU national ones) and two versions of the ‘Start’ key, and the “health warning” on the bottom of the case is either in Polish or in English. Some have the manufacture date printed on the label, some do not (it is present on the plastics inside).

Summary

A good keyboard is a must. I am unable to work on normal keyboards any more. Laptop ones are kind of fine, but I use them mostly when travelling to conferences, when I do not spend much time at a keyboard.

And I hope that #ms4kmech project will end with something usable ;D

12 February 2024

Marcin Juszkiewicz

Twenty years of my work with Arm architecture

Twenty years ago I bought a Sharp Zaurus SL-5500 Linux PDA, with a StrongARM SA1110 inside. And I had no idea where it would lead…

Hobby

At first it was a hobby. Finding out what I could do with it (compared to my previous devices running PalmOS) was fun. SharpROM, OpenZaurus 3.2, OpenZaurus 3.3-pre1 etc. New apps, taskbar plugins…

Then I wanted to build something. I found an OpenZaurus toolchain, tried it and went asking for help. And got “forget this toolchain, we have a new toy: OpenEmbedded”.

And I sank in…

It took me some time to get familiar with it (lots of n00b questions asked). Then came many attempts to get a bootable image. It turned out that my image was one of the first to boot properly on an SL-5500 device. So I got write access to OpenEmbedded with a “now you can merge all of it” message.

OpenZaurus

I spent three years working on the OpenZaurus distribution, in my free time. I started as a user, went through being a developer and ended as the release manager. Over those years I moved from my SL-5500 to the c760 (donated by a user), and had several other Zaurus models on my desk in the meantime.

Those were interesting years. I learnt a lot about the structure of the Linux filesystem, how packaging works, the differences between build-time and install-time dependencies, sorting out upgrades etc. Also how to cooperate with other developers and users, and how to avoid those who deserve to be ignored.

Embedded Linux developer

At some point, working with the Arm architecture stopped being a hobby and became my daily work.

Matthew Allum from OpenedHand offered me a full-time contract, so I left my day job (as a PHP programmer) and started working on Poky Linux and its version of OpenEmbedded.

New devices, new interesting challenges, new co-workers and customers. Proper development boards (those big ones with lots of interesting connectors for even more expensive equipment), prototypes of miscellaneous devices…

After OpenedHand there were other companies interested in my services: Bug Labs with their Java-based system on top of Poky Linux, Vernier with school palmtops, and others. Still embedded Linux.

Invited speaker

My first conference was FOSDEM 2007. Several others followed, but I was an attendee rather than a speaker.

2009 changed that: Andrea Gallo from ST-Ericsson invited me to give a talk at their workshop in Grenoble. My presentation was about supporting their NHK-15 developer board in Poky Linux and OpenEmbedded.

The next day was ELCE, where I gave a talk called “Hacking with OpenEmbedded”. It was also the conference where I met Leif Lindholm and Jon Masters for the first time.

Linaro

2010 came, and I had over three years of paid embedded Linux work behind me. Canonical asked and we signed the papers. Then more papers, and I became one of the first 20 people working at Linaro, to work on improving Arm support in Linux.

It was a time when working with Arm meant embedded work, just using mainstream distributions instead of embedded ones. All those device-specific kernels, images etc.

I worked on cross-compilation toolchains for Debian/Ubuntu, AArch64 bring-up using OpenEmbedded and several other tasks.

Red Hat

In 2013 I ended my contract with Canonical, left Linaro and joined Red Hat, and met the other side of the Arm world.

I was not working on developer boards or SBCs. The project was to get the Red Hat Enterprise Linux distribution running on 64-bit Arm servers, before they even existed…

It was a fun time. Native builds in very slow system emulators took hours/days. Prototype servers were faster but also very unstable.

Anyway, we delivered. RHEL 7.2 had AArch64 support. Countless packages got fixed both in RHEL and Fedora.

Linaro again

In 2016 I joined Linaro again (this time as a Red Hat engineer) to work on Arm servers for data centers and clouds.

Virtualization, OpenStack, Big Data, emulation of Arm servers etc.

Acknowledgements

There were many people who helped me on this journey.

First of all Anna Wagner-Juszkiewicz: without her patience, and her pushing me to create my own company, I would not have gone that far.

Then OpenEmbedded team members:

- Philip Balister

- Phil Blundell

- Florian Boor

- Holger Freyther

- Koen Kooi

- Christopher Larson

- Mickey Lauer

- Richard Purdie

- Holger Schurig

- and countless contributors

People related to my job changes:

- Cliff Brake (my first freelance OE jobs)

- Tim Bird (CELF was my first customer)

- Matthew Allum (full-time contract, so I left web development)

- Christian Reis (Canonical/Linaro job)

- Jon Masters (“come, work at Red Hat with us”)

Linaro folks:

- Fathi Boudra

- Andrea Gallo

- Gema Gomez

- Lee Jones

- Leif Lindholm

- Sahaj Sarup

- Ebba Simpson

- Riku Voipio

- Linus Walleij

- Wookey

Of course I am unable to list everyone. During those twenty years I met many people, some became friends, some became adversaries. There are faces/names I remember (and more of those I should remember). And there are people who recognize me when I do not recognize them (sorry folks).

Hall of fame shelf

There is a plan to frame several devices from my past:

- Sharp Zaurus SL-5500 (from which all that started)

- Atmel AT91SAM9263EK (first developer board I owned)

- Applied Micro Mustang (first AArch64 server)

And who knows, maybe some other hardware too, but first I would need to get it. Some of it has already been recycled, some had to be destroyed.

Plans for next years?

I plan to continue working on the Arm architecture. It is still fun for me.

And who knows, maybe one day a standards-compliant AArch64-based system will replace my x86-64 desktop: all those UEFI, ACPI, SBSA, SBBR, SystemReady buzzwords in a working piece of hardware. Just without spending thousands of €€€ on it.

11 January 2024

Marcin Juszkiewicz

2023 summary

In past years I wrote a summary of the previous year in the form of a timeline, with info about each month etc. Time to write about the previous year again, but in a different form.

SBSA Reference Platform

At work my main project in 2023 was the SBSA Reference Platform, across the QEMU, Trusted Firmware (TF-A) and Tianocore EDK2 projects.

In May I gathered feedback from Leif, Shashi and others about how we planned to version the platform. There were plans for upgrades, but those had to be done in a sensible way.

More about it is in the “Versioning of sbsa-ref machine” blog post. We managed several version bumps and finally got it done the proper way.

There was a time when the firmware described a different USB controller than the one the hardware had. As it was on a non-discoverable system bus, some operating systems ignored it and worked (Linux), while others (BSD) complained and hung.

More about it in the “Testing *BSD” post.

November brought the TF-A 2.10 and EDK2 202311 releases, which gave us official firmware for our platform, with everything working as expected on all the operating systems we checked (*BSD, Linux, MS Windows).

We are quite close to SBSA level 3 compliance.

Personal projects

software ones

My system calls table project got several updates as the Linux kernel got some new entries. There were also several code cleanups to stop listing calls that are present in the kernel but not implemented, or that were already removed and I had missed it.

I also merged my Python system-calls project into the one above. It made my life easier, as they duplicated each other. Now the script that generates my “Linux kernel system calls for all architectures” table uses the Python class, so I have less code to maintain.

More AArch64 SoCs appeared in the AArch64 SoC features table. It is nice to see more and more Arm v8.2 SBCs, and newer and newer cores appearing in Android phones/tablets.

I wrote the ArmCpuInfo application to check which CPU features are supported, from the EFI shell. Feedback from the community was positive.
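Checks like that boil down to reading AArch64 ID registers and extracting 4-bit fields. Below is a hedged C sketch of just the decoding step, for a few fields of ID_AA64ISAR0_EL1 (field positions as documented in the Arm Architecture Reference Manual). It is not taken from ArmCpuInfo, and the register value in the example is made up rather than read from real hardware, where it would come from an `mrs` instruction.

```c
#include <stdint.h>

/* Extract the 4-bit ID register field that starts at bit `shift`. */
static unsigned id_field(uint64_t reg, unsigned shift)
{
    return (unsigned)((reg >> shift) & 0xF);
}

/* Bit positions of a few ID_AA64ISAR0_EL1 fields. */
enum {
    ISAR0_AES_SHIFT    = 4,   /* 1: AES, 2: AES + PMULL           */
    ISAR0_SHA1_SHIFT   = 8,   /* 1: SHA1 instructions             */
    ISAR0_SHA2_SHIFT   = 12,  /* 1: SHA256, 2: SHA256 + SHA512    */
    ISAR0_CRC32_SHIFT  = 16,  /* 1: CRC32 instructions            */
    ISAR0_ATOMIC_SHIFT = 20,  /* 2: LSE atomics (Armv8.1)         */
};
```

For the sample value 0x211120, AES reads as 2 (AES plus PMULL), SHA1, SHA2 and CRC32 as 1, and Atomic as 2 (LSE atomics).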

hardware ones

After several attempts I got a ‘virtual kick in the butt’ and finally ordered new furniture for my kitchen. It just got mounted, so I am now in the middle of handling the changes, but it will be a nice improvement.

Another thing was the move from Ikea Trådfri to HomeAssistant with a Zigbee dongle. A lot of cursing later, things (usually) work as intended. Some interesting upgrades were done: some areas have different light levels depending on the time of day/night, and some buttons switch lights in several areas at once.

Travels

2023 was Europe only. One of the reasons was that Linaro Connect took place just once (and it will stay that way), and I did not attend any conferences outside Europe.

FOSDEM

Great as usual.

The Netherlands

February in the Netherlands was a nice time. I took my daughter to visit friends in Eindhoven (again). Carnival time was nice. All those people in costumes…

While there we went to Amsterdam to visit the Anne Frank House museum, and it was time well spent. This place is “a must visit” if you are in the area.

As the Museumkaarts from our summer 2022 trip were still valid, we went to the NEMO science museum. “Would you like to see dead fetuses?” was the question of the visit, as there was an exhibition of human fetuses in formalin(?).

As we had some time between the NEMO and Anne Frank museums, we got some good ramen at the same place as in 2022 (Vatten Ramen Zeedijk restaurant). After the meal we visited Koninklijk Paleis Amsterdam (the Royal Palace). An interesting place, especially for a 0€ price ;D

The return trip went through Warsaw, so we met some friends there, visited the Copernicus Science Centre and went back home.

Linaro Connect London

I had been to London before, so it was kind of a ‘meh’ trip when it comes to sightseeing.

It was great to meet co-workers and people from all the projects Linaro is involved in, and to discuss random things with them. I discussed the next steps for the SBSA Reference Platform with other developers.

And on the way from the hotel to the airport I visited the Imperial War Museum. It was interesting to look at World War I/II from another perspective. I have to visit a similar museum in Germany.

Vacations

In Poland, by car, as usual. We met family (we are quite spread across the country) and long-time-no-see friends, and rested a bit.

Several museums again: a small automotive one in Gdynia, a large one in Babki Oleckie, and a small one with American cars in Kościerzyna.

We saw a cemetery in the middle of a forest, visited a church where the Holy Grail was present a few centuries ago, and some other old churches.

One day we went to see żubry. In English they are called “European bison”, but we discussed that maybe the American bison should rather be called the “American żubr” :D

Manga/anime/fantasy events

My daughter is a manga/anime fan. So for her birthday I gave her the 3rd book of the 乙嫁語り (Otoyomegatari) series. In Japanese, as one of my friends was in Tokyo, bought it and brought it to FOSDEM. There was some laughter, and the book landed on the shelf next to the previous ones in Polish.

June meant Pyrkon (a fantasy convention) in Poznań. It was tough due to the weather. Friends, talks, discussions, cosplayers… A nice time. The good thing is that in 2024 Mira will go there with her mother instead of me. I am tired of Pyrkon already ;D

In August we went to the Mizukon convention in Przecław, close to Szczecin (reachable by public transport). It was a great event. We met some interesting people, had nice discussions and watched several talks. I even managed to win the “Anime in 90s” quiz despite not watching anime at that time (I did not have access to satellite TV).

October brought Japan Fest in Szczecin. I had a feeling that it was organized mostly by the same people as Mizukon. It was fun to see that people recognized me from the previous event.

Summary

In the end it was quite a nice year. There were tough times, but there were many more good ones. Looking forward to 2024 ;D

30 December 2023

Riku Voipio

Adguard DNS, or how to reduce ads without apps/extensions

What is DNS-over-TLS and DNS-over-HTTPS

Subverting private DNS for ad blocking

Other uses for AdGuard DNS

Going further

Epilogue

21 December 2023

Marcin Juszkiewicz

OPNsense is not for my router :(

For over two years my router has been a small x86-64 box running OpenWRT. But I have some issues with it.

Hardware info

I bought this PC on AliExpress sometime in 2021. It has an Intel Celeron J3160 CPU, 2GB of RAM and four Intel i211 network cards.

It can be upgraded to 8GB of RAM, can use an mSATA SSD, and has space for a 2.5” SATA drive, plus video outputs, USB ports…

And unlike several Arm systems, it can boot various operating systems out of the box.

Let’s try OPNsense!

OPNsense is a FreeBSD-based system for network routers, with a web interface, packages, monitoring, firewalling etc. Just like OpenWRT, but not based on Linux.

I fetched the current version (23.7), wrote it to a USB thumb drive and booted. It recognized all the hardware. It took me a bit of time to get basic networking running (PPPoE on igb0, LAN on igb1-3).

Then I checked performance. Speedtest.net showed 300/300 Mbps. The problem is that I have a 1000/300 Mbps connection.

FreeBSD bug

There is a FreeBSD bug report for it:

[igb] PPPoE RX traffic is limited to one queue

On PPPoE interface packets are only received on one NIC driver queue (queue0). This is hurting system performance.

I read through it, took the system tunables from comment #11 and rebooted. The new values were in use:

root@krzys:~ # sysctl -a | grep net.isr

net.isr.numthreads: 4

net.isr.maxprot: 16

net.isr.defaultqlimit: 256

net.isr.maxqlimit: 10240

net.isr.bindthreads: 1

net.isr.maxthreads: 4

net.isr.dispatch: deferred

Speedtest.net showed an improvement, but it was still slow: only 470/300 Mbps.
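To confirm after a reboot that the tunables actually took effect, the sysctl listing above can be checked mechanically. The helper below is a hypothetical sketch, not something from the bug report. It assumes the three settings that matter are net.isr.maxthreads, net.isr.bindthreads and net.isr.dispatch (on stock FreeBSD these default to one thread, no CPU binding and direct dispatch), and it reads the "name: value" lines printed by sysctl on stdin.

```shell
# Hypothetical helper: read "name: value" lines (as printed by
# `sysctl -a | grep net.isr`) on stdin and check that netisr uses more than
# one thread, binds its threads to CPUs and uses deferred dispatch.
# Prints what is wrong and returns non-zero if any check fails.
check_netisr() {
    awk -F': ' '
        $1 == "net.isr.maxthreads"  { maxthreads = $2 }
        $1 == "net.isr.bindthreads" { bindthreads = $2 }
        $1 == "net.isr.dispatch"    { dispatch = $2 }
        END {
            ok = 1
            if (maxthreads + 0 < 2)     { print "maxthreads=" maxthreads " (expected > 1)"; ok = 0 }
            if (bindthreads != "1")     { print "bindthreads=" bindthreads " (expected 1)"; ok = 0 }
            if (dispatch != "deferred") { print "dispatch=" dispatch " (expected deferred)"; ok = 0 }
            if (!ok) exit 1
        }'
}
```

On the router this would be used as: sysctl -a | grep net.isr | check_netisr && echo "netisr tunables active"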

Time to go back to OpenWRT?

Looks like I will go back to OpenWRT. It is not a perfect solution, but it works for me on this hardware.

I may not be a fan of how it upgrades packages and the rootfs, but I have some scripts to make that easier and saner.

One day I will reconsider running Debian or Fedora on it. I had a Debian-based router in the past and it worked fine.

10 November 2023

Gema Gomez

Sparkle And Shine - Quartz

Speeding up UEFI driver development

While working on the ChaosKey UEFI driver, I once again found myself in a situation of tedium: how to simplify driver development when the target for my device driver is firmware. Moving around a USB key with a FAT filesystem may be a completely valid thing to do in order to install a pre-existing driver. But when you are trying to learn how to write drivers for a new platform (and write blog posts about it), the modify -> compile -> copy -> reboot -> load -> fail -> try again cycle gets quite tedious. As usual, QEMU has/is the answer, letting you pass a USB device controlled by the host over to the guest.

Make sure your QEMU is built with libusb support

Unsurprisingly, in order to support passing through USB device access to guests, QEMU needs to know how to deal with USB, and it does this using libusb. Most Linux distributions package a version of QEMU with libusb support enabled, but if you are building your own version from source, make sure the output from configure contains "libusb yes". If it does not, under Debian "$ sudo apt-get build-dep qemu" should do the trick; anywhere else, make sure you manually install libusb v1.0 or later, with development headers (Debian's libusb-dev package is v0.1, which will not work).

Without libusb support, you'll get the error message 'usb-host' is not a valid device model name when following the instructions below.
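When rebuilding QEMU often, the configure check can be scripted. This is a hypothetical helper; it assumes the configure summary contains either the "libusb yes" line quoted above or a "libusb : YES ..." style line, since the exact format differs between QEMU versions.

```shell
# Hypothetical helper: read QEMU's configure output on stdin and succeed only
# if libusb is reported as enabled. Accepts "libusb   yes" as well as
# "libusb   : YES 1.0.x" style summary lines (case-insensitive).
configure_has_libusb() {
    grep -Eiq '^[[:space:]]*libusb[[:space:]:]+yes'
}
```

Save the configure output to a file first (for example ./configure | tee configure.log) and then run configure_has_libusb < configure.log, rather than piping configure straight into it: grep -q stops reading at the first match, which could cut configure off mid-run. To check for the libusb 1.0 development files beforehand, pkg-config --atleast-version=1.0 libusb-1.0 is another option.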

Identifying your device

Once your QEMU is ready, in order to pass a device through to a guest, you must first locate it on the host.

$ lsusb

...

Bus 008 Device 002: ID 1d50:60c6 OpenMoko, Inc

Bus 008 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

....

The vendor ID (1d50) of ChaosKey comes from the OpenMoko project, which has repurposed its otherwise unused device registry space for various community/homebrew devices.

If you want to be able to access the device as non-root, ensure the device node /dev/bus/usb/<bus>/<device> (in this case /dev/bus/usb/008/002) has suitable read-write access for your user.
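Going from the lsusb line to the device node is mechanical, so it can be scripted for a given vendor:product pair. The function names below are hypothetical; the sketch assumes lsusb's usual "Bus NNN Device NNN: ID vvvv:pppp ..." line format, as in the listing above.

```shell
# Hypothetical helper: turn one lsusb line into its /dev/bus/usb node, e.g.
# "Bus 008 Device 002: ID 1d50:60c6 OpenMoko, Inc" -> /dev/bus/usb/008/002
lsusb_line_to_node() {
    printf '%s\n' "$1" | awk '$1 == "Bus" && $3 == "Device" {
        sub(":", "", $4)                       # "002:" -> "002"
        printf "/dev/bus/usb/%s/%s\n", $2, $4
    }'
}

# Scan lsusb output on stdin for vendor:product ($1, e.g. 1d50:60c6) and
# print the node of the first match; return 1 if the device is not present.
find_usb_node() {
    while IFS= read -r line; do
        case "$line" in
            *"ID $1"*) lsusb_line_to_node "$line"; return 0 ;;
        esac
    done
    return 1
}
```

For example, node=$(lsusb | find_usb_node 1d50:60c6) && ls -l "$node" shows who currently has access to the ChaosKey node.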

Launching QEMU

Unlike the x86 default "pc" machine, QEMU has no default machine type for ARM. The "generic virtualized platform" type virt has no USB attached by default, so we need to add a controller manually. ich9-usb-uhci1 supports high-speed, and so is needed for some devices to work under emulation. Using the "virtual FAT" support in QEMU is the easiest way to get a driver into UEFI without recompiling. The example below uses a subdirectory called fatdir, from which QEMU generates an emulated FAT filesystem.

USB_HOST_BUS=8

USB_HOST_PORT=2

AARCH64_OPTS="-pflash QEMU_EFI.fd -cpu cortex-a57 -M virt -usb -device ich9-usb-uhci1"

X86_OPTS="-pflash OVMF.fd -usb"

COMMON_OPTS="-device usb-host,bus=usb-bus.0,hostbus=$USB_HOST_BUS,hostport=$USB_HOST_PORT -nographic -net none -hda fat:fatdir/"

This can then be executed as

$ qemu-system-x86_64 $X86_OPTS $COMMON_OPTS

or

$ qemu-system-aarch64 $AARCH64_OPTS $COMMON_OPTS

respectively.

Note: Ordering of some of the above options is important.

Note2: The AARCH64 QEMU_EFI.fd needs to be padded to 64MB for QEMU to accept it. See this older post for an example.
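The older post is not reproduced here, but one common way to do the padding is to zero-fill a copy of the image up to 64 MiB. This is a sketch under that assumption (zero padding at the end of the image is acceptable); the function name is hypothetical and it relies on the truncate utility (GNU coreutils, also present on the BSDs).

```shell
# Hypothetical helper: copy a firmware image ($1) to $2 and zero-pad the copy
# to 64 MiB for use with -pflash. Refuses images already larger than that,
# since truncate would otherwise cut them short.
pad_flash_image() {
    src=$1
    dst=$2
    size=$((64 * 1024 * 1024))
    cur=$(wc -c < "$src")
    if [ "$((cur))" -gt "$size" ]; then
        echo "$src is larger than 64 MiB" >&2
        return 1
    fi
    cp "$src" "$dst" && truncate -s "$size" "$dst"
}
```

After pad_flash_image QEMU_EFI.fd flash0.img, AARCH64_OPTS would use -pflash flash0.img instead of -pflash QEMU_EFI.fd.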

My usage then looks like the following, after having been dropped into the UEFI shell, with a bunch of overly verbose debug messages in my last compiled version of the driver:

Shell> load fs0:\ChaosKeyDxe.efi

*** Installed ChaosKey driver! ***

Image 'FS0:\ChaosKeyDxe.efi' loaded at 47539000 - Success

Shell> y (0x1D50:0x60C6) is my homeboy!

1 supported languages

0: 0409

Manufacturer: altusmetrum.org

Product: ChaosKey-hw-1.0-sw-1.6.6

Serial: 001c00325346430b20333632

And, yes, those last three lines are actually read from the USB device connected to the host QEMU is running on.
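A caveat on USB_HOST_BUS and USB_HOST_PORT in the launch options above: as far as I understand QEMU's usb-host device, the "Device 002" field in lsusb is the device address (matched with hostaddr=), while hostport= expects the physical port, which lsusb -t lists as "Port N". The two can coincide, and presumably did here. A way to sidestep the question is to match the device by ID with usb-host's vendorid and productid properties. The helper below is a hypothetical sketch of that; it keeps bus=usb-bus.0 from the original options.

```shell
# Hypothetical helper: build a usb-host option that selects the device by its
# USB vendor and product IDs (as printed by lsusb) instead of bus and port.
usb_host_by_id() {
    # $1 = vendor:product, e.g. 1d50:60c6
    vendor=${1%%:*}
    product=${1#*:}
    printf '%s\n' "-device usb-host,bus=usb-bus.0,vendorid=0x$vendor,productid=0x$product"
}
```

It would slot into the existing variables as COMMON_OPTS="$(usb_host_by_id 1d50:60c6) -nographic -net none -hda fat:fatdir/", which keeps working if the key is replugged and gets a new address.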

Final comments

One thing that is unsurprising, but very cool and useful, is that this works well cross-architecture. So you can test that your drivers are truly portable by building (and testing) them for AARCH64, EBC and X64 without having to move around between physical machines.

Oh, and another useful command in this cycle is reset -s in the UEFI shell, which "powers down" the machine and exits QEMU.
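The load step can also be taken out of the loop: the EDK2 UEFI shell runs a startup.nsh script it finds on a mapped filesystem at boot (after a short countdown). This is an addition, not from the post, and a sketch under the assumptions that the FAT directory appears as fs0: (as in the session above) and the driver is named ChaosKeyDxe.efi; the helper name is hypothetical.

```shell
# Hypothetical helper: write startup.nsh into the directory backing
# "-hda fat:...", so the UEFI shell loads the driver on boot. With "reset"
# as the second argument, the script also runs "reset -s" afterwards,
# which powers the VM down and exits QEMU.
write_startup_nsh() {
    # $1 = directory, e.g. fatdir; $2 = optional "reset"
    {
        printf 'fs0:\r\n'
        printf 'load ChaosKeyDxe.efi\r\n'
        if [ "$2" = "reset" ]; then
            printf 'reset -s\r\n'
        fi
    } > "$1/startup.nsh"
}
```

With write_startup_nsh fatdir reset in place, each iteration becomes: rebuild, copy ChaosKeyDxe.efi into fatdir, start QEMU, read the debug output; leave out "reset" when you want to stay in the shell and poke around.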

14 October 2023

Gema Gomez

Flipping out zippered case


09 September 2023

Gema Gomez

Embroidering quilt labels
